Welcome to the world of testing, where precision meets assurance! In this blog post, we’re taking a stroll through the basics of Test Strategy—a key player in the software testing game. No jargon, no tech babble—just simple words to help you grasp the essentials.

Test Strategy is your roadmap to ensure that software behaves as it should. Imagine it as your trusty guide, outlining the who, what, when, and how of testing.

Whether you’re a novice or looking for a quick refresher, we’ve got you covered. We’ll break down the what and why of Test Strategy, explore its components, and show you how it paves the way for more reliable software with fewer bugs.

So, buckle up as we embark on this journey to unravel the secrets of Test Strategy—because testing isn’t just about finding bugs; it’s about delivering quality software with confidence!

What Is Test Strategy?

A test strategy is a high-level guideline for testing software that helps ensure it works as intended. It matters because it keeps the testing process organized and effective. Think of it as a plan that tells testers what to test, how to test it, and when to test it.

Components of a test strategy include defining the scope, which means deciding what parts of the software to test. Then there’s the test environment: the hardware, software, and network setup needed for testing. Test levels and types are also crucial, outlining the different kinds of testing to be done.

Additionally, it covers the resources required, such as people and tools, and the schedule for testing activities. Lastly, it outlines the entry and exit criteria, determining when testing can begin and when it’s considered complete.

A good test strategy ensures thorough testing, reducing the chances of bugs slipping through and improving overall software quality.

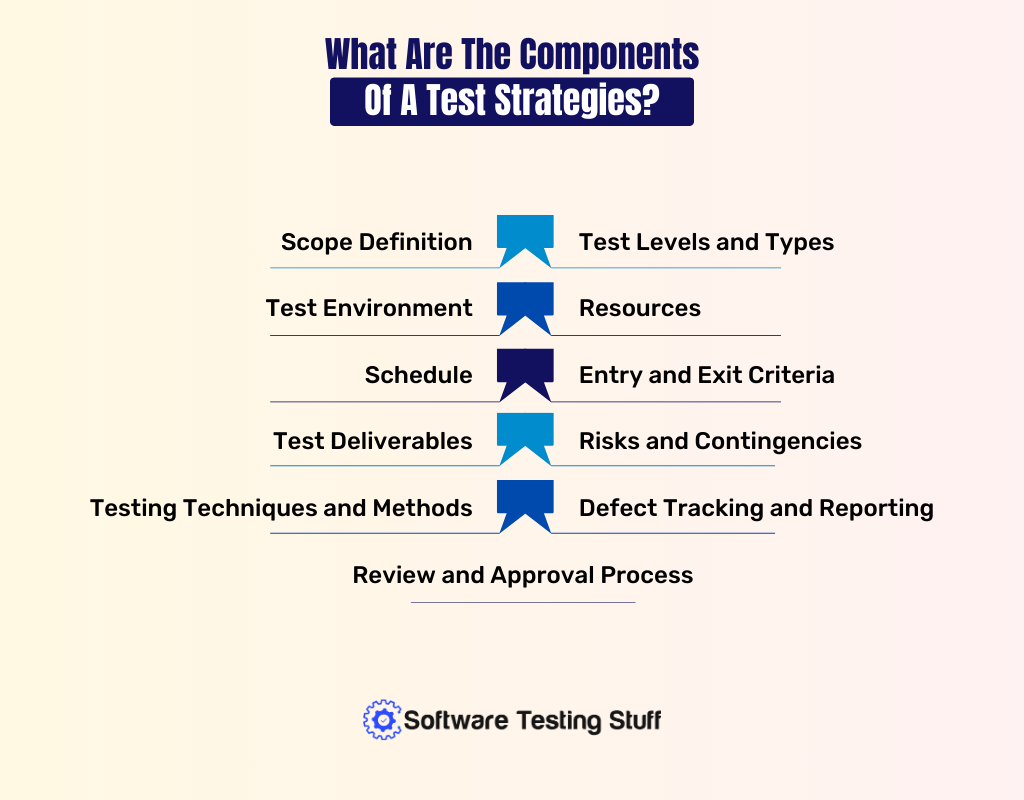

What Are The Components Of A Test Strategy?

A test strategy typically consists of several key components to guide the testing process effectively. These components include:

Scope Definition

Clearly outlining what parts of the software will be tested.

Test Levels and Types

Identifying the various levels (like unit, integration, system) and types (such as functional, performance) of testing to be performed.

Test Environment

Describing the necessary hardware, software, and network setups needed for testing.

Resources

Specifying the human resources, tools, and equipment required for testing.

Schedule

Establishing the timeline for different testing activities.

Entry and Exit Criteria

Defining conditions that must be met for testing to begin (entry criteria) and criteria for testing completion (exit criteria).

Test Deliverables

Listing the documents, reports, and artifacts that will be produced during the testing process.

Risks and Contingencies

Identifying potential risks to the testing process and outlining plans to mitigate them.

Testing Techniques and Methods

Describing the specific approaches and methods that will be used during testing.

Defect Tracking and Reporting

Detailing how defects will be identified, tracked, and reported.

Review and Approval Process

Outlining the procedures for reviewing and obtaining approval for the test strategy.
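
As a rough illustration of the Entry and Exit Criteria component, the conditions can be written down as explicit checks. The sketch below is in Python; the `CycleStatus` fields and the 95% pass-rate threshold are assumptions made up for this example, not values a test strategy prescribes.

```python
from dataclasses import dataclass


@dataclass
class CycleStatus:
    """Snapshot of one test cycle (all fields are hypothetical)."""
    build_deployed: bool
    test_data_ready: bool
    planned_cases: int
    executed_cases: int
    passed_cases: int
    open_critical_defects: int


def entry_criteria_met(status: CycleStatus) -> bool:
    # Testing may begin only when the build and the test data are in place.
    return status.build_deployed and status.test_data_ready


def exit_criteria_met(status: CycleStatus, min_pass_rate: float = 0.95) -> bool:
    # Testing is complete when every planned case has run, the pass rate
    # reaches the agreed threshold, and no critical defect remains open.
    if status.planned_cases == 0 or status.executed_cases < status.planned_cases:
        return False
    pass_rate = status.passed_cases / status.executed_cases
    return pass_rate >= min_pass_rate and status.open_critical_defects == 0


status = CycleStatus(
    build_deployed=True,
    test_data_ready=True,
    planned_cases=200,
    executed_cases=200,
    passed_cases=192,
    open_critical_defects=0,
)
print(entry_criteria_met(status))  # True
print(exit_criteria_met(status))   # True (192 / 200 = 0.96)
```

Writing the criteria as checks like these makes "done" something the team can verify rather than debate; the actual conditions and thresholds should come from the project itself.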

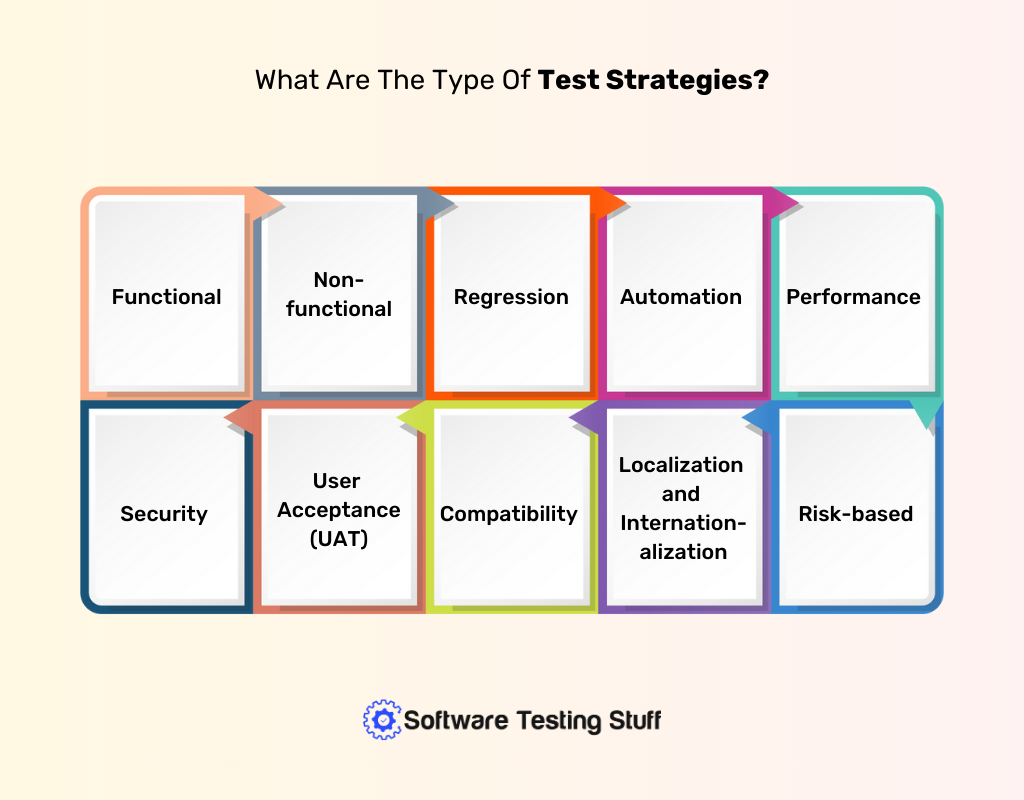

What Are The Types Of Test Strategies?

Test strategies can be broadly categorized into different types based on their focus and objectives. Here are some common types of test strategies:

Functional Test Strategy

Focuses on verifying that the software functions according to specified requirements. It involves testing the application’s features, user interfaces, APIs, and integrations.

Non-functional Test Strategy

Concentrates on aspects other than the functional requirements, such as performance, usability, reliability, and security. This strategy ensures that the software meets criteria related to these non-functional attributes.

Regression Test Strategy

Aims to ensure that new changes or enhancements to the software do not adversely affect existing functionality. It involves retesting the previously tested features to catch any unintended side effects.

Automation Test Strategy

Focuses on using automated testing tools to streamline and accelerate the testing process. This strategy defines which tests should be automated, the tools to be used, and the overall automation approach.

Performance Test Strategy

Specifically designed to assess how well the software performs under various conditions, such as heavy user loads or high data volumes. It includes strategies for load testing, stress testing, and scalability testing.

Security Test Strategy

Concentrates on identifying and addressing potential vulnerabilities and weaknesses in the software’s security measures. It involves testing for issues like data breaches, unauthorized access, and compliance with security standards.

User Acceptance Test (UAT) Strategy

Outlines the approach to validating whether the software meets the end users’ requirements and expectations. UAT strategies often involve collaboration with actual users to ensure the software aligns with real-world usage.

Compatibility Test Strategy

Ensures that the software functions correctly across different platforms, browsers, devices, and operating systems. This strategy is crucial for applications with a diverse user base.

Localization and Internationalization Test Strategy

Addresses the software’s readiness for different languages and regions. Localization focuses on adapting the software for a specific locale, while internationalization ensures that the software can be easily adapted to different locales.

Risk-based Test Strategy

Prioritizes testing efforts based on the perceived risks associated with different features or components. It involves identifying high-risk areas and allocating testing resources accordingly.
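
One common way to put a risk-based strategy into practice is to score each feature as likelihood of failure multiplied by impact of failure, then test the highest scores first. The sketch below assumes a 1-to-5 scale; the feature names and ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    likelihood: int  # chance of failure, 1 (low) to 5 (high)
    impact: int      # cost of failure, 1 (low) to 5 (high)

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(features: list[Feature]) -> list[Feature]:
    # Highest risk first, so limited testing time goes to those areas.
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


features = [
    Feature("profile page", likelihood=2, impact=2),
    Feature("payment checkout", likelihood=4, impact=5),
    Feature("login", likelihood=3, impact=5),
]

for feature in prioritize(features):
    print(f"{feature.name}: {feature.risk_score}")
# payment checkout: 20
# login: 15
# profile page: 4
```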

What Is Test Strategy Selection?

Test strategy selection involves the process of choosing the most appropriate and effective test strategy for a particular software development project. It is a critical decision-making step that considers various factors to ensure that the testing approach aligns with the project’s goals, requirements, and constraints.

The selection of a suitable test strategy is essential for achieving thorough test coverage and delivering high-quality software. Here are the key aspects involved in test strategy selection:

Project Characteristics

Analyzing the unique characteristics of the project, such as its size, complexity, and nature of the application, to determine the most suitable testing approach.

Project Objectives

Understanding the specific goals of the project, including functional requirements, performance expectations, and non-functional attributes, to tailor the test strategy accordingly.

Project Constraints

Considering any constraints that might impact testing, such as time limitations, budget constraints, or resource availability, and selecting a strategy that can operate within these constraints.

Risk Analysis

Identifying potential risks associated with the project and selecting a test strategy that effectively addresses and mitigates these risks. For instance, a riskier project may require a more comprehensive and iterative testing approach.

Regulatory and Compliance Requirements

Taking into account any industry-specific regulations or compliance standards that the software must adhere to and selecting a test strategy that ensures compliance.

Development Methodology

Aligning the test strategy with the chosen development methodology (e.g., Agile, Waterfall) to ensure testing activities are integrated seamlessly into the overall development process.

Resource Availability

Considering the availability and skill set of testing resources, including human testers and testing tools, and selecting a strategy that optimally utilizes these resources.

Customer Expectations

Understanding customer expectations and user needs to select a test strategy that focuses on areas most critical to end users. This may involve user acceptance testing or usability testing strategies.

Test Environment

Evaluating the complexity and requirements of the test environment and selecting a strategy that accommodates the necessary configurations and setups.

Feedback Mechanism

Establishing a mechanism for continuous feedback and improvement throughout the testing process. This may involve periodic reviews and adjustments to the test strategy based on feedback from testing activities.

Budget and Cost Considerations

Considering the budget allocated for testing activities and selecting a strategy that provides the best value within the specified financial constraints.
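
One possible way to compare candidate strategies against these factors is a simple weighted scoring matrix. The weights, factor names, and ratings below are hypothetical and only show the mechanics; a real project would agree on its own.

```python
# Hypothetical weights for a few of the selection factors (they sum to 1.0).
weights = {"risk": 0.4, "budget": 0.3, "methodology_fit": 0.3}

# Hypothetical 1-5 ratings of how well each candidate suits each factor.
candidates = {
    "risk-based": {"risk": 5, "budget": 4, "methodology_fit": 4},
    "full regression": {"risk": 4, "budget": 2, "methodology_fit": 3},
    "automation-first": {"risk": 3, "budget": 3, "methodology_fit": 5},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    # Sum of rating * weight over every factor that has a weight.
    return sum(weights[factor] * ratings[factor] for factor in weights)


scores = {name: weighted_score(r, weights) for name, r in candidates.items()}
best = max(scores, key=scores.get)

print(best)                    # risk-based
print(round(scores[best], 2))  # 4.4
```

A matrix like this does not make the decision for the team, but it does force the trade-offs between factors to be stated explicitly.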

What Are The Differences Between Test Strategy And Test Plan?

Test strategy and test plan are two distinct documents in the software testing process, each serving a specific purpose. Here are the key differences between test strategy and test plan:

Purpose

Test Strategy: It is a high-level document that outlines the overall approach to testing. It defines the testing objectives, methods, and guidelines for the entire project.

Test Plan: It is a detailed document that provides a roadmap for the testing process. It outlines the specific activities, resources, and schedule for each phase of testing.

Scope

Test Strategy: It has a broader scope and focuses on the entire project. It covers aspects like test levels, test types, and overall testing approach.

Test Plan: It has a narrower scope, focusing on a specific test level or phase of testing. It details the activities, resources, and schedule for that particular testing phase.

Level of Detail

Test Strategy: It is less detailed and serves as a guideline for creating more detailed test plans.

Test Plan: It is highly detailed, specifying the test scenarios, test cases, test data, and the execution schedule for each testing phase.

Timeframe

Test Strategy: It is typically created early in the project lifecycle, often during the planning phase, and is relatively stable throughout the project.

Test Plan: It is created after the test strategy, usually before each testing phase begins, and may be updated regularly to reflect changes in project requirements or testing needs.

Content

Test Strategy: It includes information on test levels, test types, entry and exit criteria, resources, and high-level test schedules.

Test Plan: It includes detailed information on test cases, test scripts, test data, test environment setup, responsibilities, and the schedule for each testing phase.

Audience

Test Strategy: It is primarily for project stakeholders, providing an overview of the testing approach and goals.

Test Plan: It is for the testing team, development team, and other project stakeholders involved in the specific testing phase.

Flexibility

Test Strategy: It is relatively stable and changes less often than a test plan, though it should still be revisited when requirements, risks, or priorities shift.

Test Plan: It is more dynamic and subject to frequent updates as testing progresses and project requirements evolve.

What Are The Details Included In The Test Strategy Document?

A Test Strategy document is a high-level document that outlines the overall approach to testing within a software development project. While the specific details can vary based on the project’s needs and complexity, a typical Test Strategy document includes the following key details:

Introduction

Brief overview of the document, its purpose, and its intended audience.

Objective

Clearly defined testing objectives, including what the testing process aims to achieve.

Scope

Description of the features or functionalities to be tested, as well as any exclusions or areas that won’t be tested.

Test Levels

Identification and description of the different testing levels (e.g., unit testing, integration testing, system testing, acceptance testing) that will be conducted.

Test Types

Specification of the types of testing to be performed (e.g., functional testing, performance testing, security testing) and their goals.

Testing Techniques

Overview of the testing techniques and methods that will be employed, such as manual testing, automated testing, or a combination of both.

Test Deliverables

List of documents and artifacts that will be produced during the testing process, including test plans, test cases, and test reports.

Test Environment

Description of the test environment, including hardware, software, network configurations, and any other dependencies required for testing.

Entry and Exit Criteria

Clearly defined conditions that must be satisfied before testing can commence (entry criteria) and criteria that indicate when testing is considered complete (exit criteria).

Test Schedule

Overview of the testing timeline, including milestones, deadlines, and any dependencies on other project activities.

Resource Planning

Allocation of human and technical resources for testing, including roles and responsibilities of team members.

Risks and Contingencies

Identification of potential risks that could impact the testing process, along with contingency plans and mitigation strategies.

Testing Tools

Specification of the tools and software that will be used to facilitate the testing process, covering both manual and automated testing.

Defect Tracking and Reporting

Procedures for identifying, tracking, and reporting defects, including the format for defect reports and the workflow for defect resolution.

Review and Approval Process

Explanation of how the test strategy document will be reviewed, approved, and updated throughout the project lifecycle.

Dependencies

Identification of any external dependencies that may impact the testing process, such as third-party services or external systems.

Training

Plans for training team members on the testing processes, tools, and methodologies.
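
Because the list above works as a checklist, a draft can be checked against it mechanically. The following is a minimal sketch that assumes the draft is held as a mapping from section title to text; the `draft` content is invented for illustration.

```python
REQUIRED_SECTIONS = [
    "Introduction",
    "Objective",
    "Scope",
    "Test Levels",
    "Test Types",
    "Testing Techniques",
    "Test Deliverables",
    "Test Environment",
    "Entry and Exit Criteria",
    "Test Schedule",
    "Resource Planning",
    "Risks and Contingencies",
    "Testing Tools",
    "Defect Tracking and Reporting",
    "Review and Approval Process",
    "Dependencies",
    "Training",
]


def missing_sections(document: dict[str, str]) -> list[str]:
    # A section counts as missing if it is absent or contains only whitespace.
    return [s for s in REQUIRED_SECTIONS if not document.get(s, "").strip()]


# Hypothetical draft with two sections written and one left empty.
draft = {
    "Introduction": "Test strategy for a hypothetical billing service.",
    "Objective": "Verify invoicing against the agreed requirements.",
    "Scope": "",
}

gaps = missing_sections(draft)
print(len(gaps))  # 15
print(gaps[:3])   # ['Scope', 'Test Levels', 'Test Types']
```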

Conclusion

A solid Test Strategy is like a guiding star for the testing journey, ensuring a smooth and effective ride through the software development landscape. By clearly outlining objectives, identifying risks, and providing a roadmap for testing activities, it acts as a beacon of clarity for the entire team.

Remember, it’s not just a document but a powerful tool that aligns everyone towards the common goal of delivering high-quality software. As you embark on your testing adventure, keep in mind that flexibility and adaptability are key companions.

Regularly revisit and update your strategy to accommodate changes and challenges along the way. With a well-crafted Test Strategy in hand, you’re not just testing; you’re paving the way for a robust, reliable, and successful software journey. Happy testing!

Frequently Asked Questions

Can a Test Strategy be modified during the course of a project?

Yes, a Test Strategy is not static. It should be flexible to accommodate changes in project requirements, emerging risks, or shifts in priorities. Regular reviews and updates ensure that the strategy remains aligned with project goals.

How does a Test Strategy contribute to improved software quality?

A well-defined Test Strategy promotes thorough testing, early defect detection, and efficient use of resources. By addressing risks, ensuring comprehensive test coverage, and fostering collaboration, it significantly contributes to the delivery of high-quality software.

What factors should be considered when developing a Test Strategy?

Key considerations include project objectives, scope, risk analysis, regulatory requirements, development methodology, resource availability, and constraints such as time and budget. These factors help tailor the Test Strategy to the unique needs of the project.
